A practical view of Server-Timing in a network waterfall.

Server Timing API for Performance Debugging: 15 Quick Wins

By Morne de Heer, Published by Brand Nexus Studios

If you want to catch backend slowdowns before users do, the Server Timing API is one of the best tools for performance debugging. It ships granular timings straight from your server to the browser so you can see exactly where the time goes.

Here is the hook: with the Server Timing API you get insight into database waits, application logic, and cache hits without shipping any JavaScript. You can wire those numbers into DevTools, dashboards, and alerts without guesswork.

What is the Server Timing API?

The Server Timing API is a W3C specification built around an HTTP response header, not a library. Your server writes a Server-Timing header that lists named metrics, each with an optional duration in milliseconds and an optional description. The browser parses it and exposes the values in DevTools and through the Performance API.

Because it is just a header, it is framework-agnostic, cheap to send, and easy to audit. You can add it to dynamic pages, APIs, and cacheable responses such as HTML and JSON. Keep in mind that a cached response replays the timings it was stored with.

Why this header matters for real performance

You cannot fix what you cannot see. Server-Timing surfaces latency where it is created, which helps you shorten time to first byte (TTFB), stabilize render start, and speed up Largest Contentful Paint (LCP) for real users.

Backend time often hides inside an opaque TTFB number. With these metrics you can separate database waits from application logic, and edge work from origin work. When your team talks in numbers, decisions get easier and faster.

Better yet, Server-Timing does not require page tags to load. The browser already understands it, so you can iterate safely without shipping heavy scripts.

How the Server-Timing header works

You place comma-separated metrics in the Server-Timing header. Each metric has a short name, an optional dur parameter (the duration in milliseconds), and an optional desc parameter (a description). The format is minimal and looks like this:

```http
Server-Timing: db;dur=37;desc="Postgres query", app;dur=82;desc="SSR", cache;desc="HIT", edge;dur=4
```

The browser parses these entries and shows them under the Network tab. JavaScript can read them via the PerformanceServerTiming interface.
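To work with these values outside the browser, for example in a CI script or a log pipeline, you need to parse the header yourself. Below is a minimal sketch in JavaScript (Node). `parseServerTiming` is a hypothetical helper, not a library API. It returns objects shaped like the browser's PerformanceServerTiming entries (`name`, `duration`, `description`) and handles quoted descriptions that contain commas or escaped quotes. It does not cover every corner of the header grammar.

```javascript
// Match runs of text between commas (ENTRIES) or semicolons (PARAMS),
// treating "quoted strings" as opaque so separators inside them do not split.
const ENTRIES = /(?:[^,"]|"(?:[^"\\]|\\.)*")+/g;
const PARAMS = /(?:[^;"]|"(?:[^"\\]|\\.)*")+/g;

// Strip surrounding quotes and undo backslash escapes
function unquote(raw) {
  const v = raw.trim();
  if (v.length >= 2 && v.startsWith('"') && v.endsWith('"')) {
    return v.slice(1, -1).replace(/\\(.)/g, '$1');
  }
  return v;
}

// Hypothetical helper: parse a Server-Timing header value into
// { name, duration, description } objects (duration in milliseconds).
function parseServerTiming(header) {
  const metrics = [];
  for (const entry of header.match(ENTRIES) ?? []) {
    const [rawName = '', ...params] = entry.match(PARAMS) ?? [];
    const name = rawName.trim();
    if (!name) continue; // skip empty entries such as a trailing comma
    const metric = { name, duration: 0, description: '' };
    let haveDur = false;
    let haveDesc = false;
    for (const param of params) {
      const eq = param.indexOf('=');
      if (eq === -1) continue;
      const key = param.slice(0, eq).trim().toLowerCase();
      const value = unquote(param.slice(eq + 1));
      // In this sketch the first dur and desc win; later repeats are ignored
      if (key === 'dur' && !haveDur) {
        const ms = Number(value);
        metric.duration = Number.isFinite(ms) ? ms : 0;
        haveDur = true;
      } else if (key === 'desc' && !haveDesc) {
        metric.description = value;
        haveDesc = true;
      }
    }
    metrics.push(metric);
  }
  return metrics;
}
```

Because the output mirrors the browser's shape, code written against `performance` entries can often be reused unchanged on the server.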

How to implement Server-Timing

Node and Express

Many teams start in Express. Add middleware that measures spans and writes one Server-Timing header per request. Server-Timing fits nicely alongside existing logging.

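```javascript
// Express example: Server-Timing middleware
const express = require('express');

const app = express();

// Hypothetical stand-ins for real database and application work
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const fakeDb = () => sleep(30);
const fakeApp = () => sleep(60);

// Milliseconds elapsed since an earlier process.hrtime.bigint() reading
const msSince = (start) => Number(process.hrtime.bigint() - start) / 1e6;

app.use(async (req, res, next) => {
  const t0 = process.hrtime.bigint();

  // Pretend database call
  const tDb0 = process.hrtime.bigint();
  await fakeDb();
  const tDb = msSince(tDb0);

  // Application work
  const tApp0 = process.hrtime.bigint();
  await fakeApp();
  const tApp = msSince(tApp0);

  // Headers can only change before the response is sent, so set it now
  res.setHeader('Server-Timing', `db;dur=${tDb.toFixed(1)}, app;dur=${tApp.toFixed(1)}`);

  res.on('finish', () => {
    // Too late for headers here, so log the total instead
    console.log(`${req.method} ${req.originalUrl} total=${msSince(t0).toFixed(1)}ms`);
  });

  next();
});

app.get('/', (req, res) => res.send('<h1>Hello</h1>'));

app.listen(3000);
```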
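The middleware above times fixed steps. To time work inside route handlers, a small per-request span recorder is often handier. This is a sketch under assumptions: `ServerTimer` is a hypothetical class, not part of Express, and repeated names are summed into one metric.

```javascript
// Hypothetical helper: collects named spans for one request and renders
// them as a single Server-Timing header value. Repeated names are summed,
// so three separate queries all add up under "db".
class ServerTimer {
  constructor() {
    this.spans = new Map(); // metric name -> total milliseconds
  }

  // Add a duration (in ms) measured elsewhere
  add(name, ms) {
    this.spans.set(name, (this.spans.get(name) ?? 0) + ms);
  }

  // Run fn (sync or async), record how long it took, and return its result
  async time(name, fn) {
    const start = performance.now(); // global in Node 16+ and browsers
    try {
      return await fn();
    } finally {
      this.add(name, performance.now() - start);
    }
  }

  // One decimal place is plenty of precision for a header
  header() {
    return [...this.spans]
      .map(([name, ms]) => `${name};dur=${ms.toFixed(1)}`)
      .join(', ');
  }
}
```

In Express you might create one per request in middleware (`res.locals.timer = new ServerTimer()`), wrap calls such as `await res.locals.timer.time('db', () => query())` in handlers, and call `res.set('Server-Timing', res.locals.timer.header())` just before sending the response. Here `query` is a placeholder for your own data access.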

Nginx

Nginx can emit literal values or durations derived from its own timing variables, such as the upstream response time. This works even when Nginx serves cached HTML. Note that Nginx reports these variables in seconds, while Server-Timing durations are in milliseconds.

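```nginx
# Nginx example: Server-Timing from Nginx timing variables.
# Both variables are in seconds ("0.012"). The maps keep the first value and
# turn it into whole milliseconds ("0012", read as 12). Maps go in http {}.
map $upstream_response_time $st_up {
    "~^(?<st_s>[0-9]+)\.(?<st_ms>[0-9]{3})" "$st_s$st_ms";
    default "";
}
map $request_time $st_edge {
    "~^(?<st_s>[0-9]+)\.(?<st_ms>[0-9]{3})" "$st_s$st_ms";
    default "";
}

# "always" also adds the header to error responses
add_header Server-Timing "edge;dur=$st_edge, upstream;dur=$st_up" always;
```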

Apache

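With mod_headers, Apache can set the header directly. The durations in this example are fixed values, so treat it as a check that the header reaches the browser. Real numbers have to come from the application. Keep the number of metrics reasonable so headers stay small.

```apache
# Apache example (mod_headers): fixed values, handy as a smoke test
Header set Server-Timing "app;dur=50, php;dur=30"

# "set" replaces any Server-Timing header the application already sent;
# "append" adds to it instead, comma-separated:
# Header append Server-Timing "apache;dur=50"
```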

PHP

Pure PHP can measure spans around code blocks and output one combined header. This keeps overhead low and gives you precise durations.

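```php
<?php
// PHP example: give each block its own start time
$t0 = microtime(true);
// ...do db...
$db = (microtime(true) - $t0) * 1000;

$t1 = microtime(true);
// ...do app...
$app = (microtime(true) - $t1) * 1000;

// header() must be called before any output is sent
header('Server-Timing: db;dur=' . round($db, 1) . ', app;dur=' . round($app, 1));
```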

Python Flask

Use Flask's before_request and after_request hooks to time the whole request. This gives you visibility without extra JavaScript libraries.

```python
# Flask example
import time

from flask import Flask, g

app = Flask(__name__)


@app.before_request
def before():
    # perf_counter() is in seconds; start the clock for this request
    g.t0 = time.perf_counter()


@app.after_request
def after(response):
    elapsed = (time.perf_counter() - g.t0) * 1000  # seconds to milliseconds
    response.headers['Server-Timing'] = f"app;dur={elapsed:.1f}"
    return response


@app.route('/')
def index():
    return '<h1>Hello</h1>'
```

Go net/http

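Wrap handlers and write a single header per response. Set the header before the handler writes its first byte, because Go ignores header changes made after that point. You can add more spans for DB and cache calls as you go.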

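```go
// Go example (package name is illustrative)
package timing

import (
	"fmt"
	"net/http"
	"time"
)

// timingWriter sets Server-Timing just before the headers go out,
// because header changes after the first write are ignored.
type timingWriter struct {
	http.ResponseWriter
	start time.Time
	sent  bool
}

func (tw *timingWriter) WriteHeader(code int) {
	if !tw.sent {
		tw.sent = true
		ms := float64(time.Since(tw.start).Microseconds()) / 1000
		tw.Header().Set("Server-Timing", fmt.Sprintf("app;dur=%.1f", ms))
	}
	tw.ResponseWriter.WriteHeader(code)
}

func (tw *timingWriter) Write(b []byte) (int, error) {
	if !tw.sent {
		tw.WriteHeader(http.StatusOK)
	}
	return tw.ResponseWriter.Write(b)
}

// WithTiming reports handler time up to the first byte as "app"
func WithTiming(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		next.ServeHTTP(&timingWriter{ResponseWriter: w, start: time.Now()}, r)
	})
}
```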

Cloudflare Workers or edge runtimes

At the edge, Server-Timing is great for measuring transform and cache steps. Add a header before returning the fetched response.

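```javascript
// Workers example: add edge timing to the origin response
export default {
  async fetch(request, env, ctx) {
    const t0 = Date.now();
    const originResponse = await fetch(request);
    // In Workers the clock only advances across I/O, which is what this times
    const dur = Date.now() - t0;

    // Re-wrap the response so its headers are mutable; passing the original
    // response as init keeps status, statusText, and headers
    const response = new Response(originResponse.body, originResponse);
    // append, not set, so Server-Timing values from the origin survive
    response.headers.append('Server-Timing', `edge;dur=${dur}`);
    return response;
  },
};
```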

Naming, values, and meaning

Choose short lowercase names. Common ones include db, app, cache, edge, upstream, auth, and render. Names must be valid HTTP tokens, so no spaces, commas, or quotes. Durations go in the dur parameter, in milliseconds.

Add desc for human-readable labels if needed, and quote it when it contains spaces. Avoid PII or secrets in names or descriptions. Keep the header to a few hundred bytes so it stays cheap to send and well within proxy and CDN header size limits.
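These rules can be enforced where the header is built. The sketch below is one way to do it, with some assumptions: `formatServerTiming` and the 300-byte default are illustrative choices, not part of any spec or library. It rejects names that are not valid HTTP tokens, escapes quotes in descriptions, and drops descriptions first when the header grows too large.

```javascript
// Server-Timing metric names must be HTTP tokens (RFC 7230 tchar characters)
const TOKEN = /^[!#$%&'*+\-.^_`|~0-9A-Za-z]+$/;

// Render one metric; descriptions are quoted with " and \ escaped
function renderMetric({ name, duration, description }, withDesc) {
  if (!TOKEN.test(name)) throw new Error(`invalid metric name: ${JSON.stringify(name)}`);
  let part = name;
  if (typeof duration === 'number' && Number.isFinite(duration)) {
    part += `;dur=${Math.round(duration * 10) / 10}`; // one decimal is plenty
  }
  if (withDesc && description) {
    part += `;desc="${description.replace(/["\\]/g, '\\$&')}"`;
  }
  return part;
}

// Build the header value. If it exceeds maxBytes, drop descriptions first,
// since names and durations carry what budgets and alerts depend on.
function formatServerTiming(metrics, maxBytes = 300) {
  const full = metrics.map((m) => renderMetric(m, true)).join(', ');
  if (Buffer.byteLength(full, 'utf8') <= maxBytes) return full; // Node's Buffer
  return metrics.map((m) => renderMetric(m, false)).join(', ');
}
```

Throwing on a bad name surfaces the mistake in development. A production wrapper might log and skip the metric instead.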

CORS and data access

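If your front end reads timing from a different origin, set Timing-Allow-Origin on that resource. Browsers expose Server-Timing values for cross-origin resources only when you explicitly allow it. Reading the raw header with fetch() is governed by CORS instead, so a cross-origin API must also list Server-Timing in Access-Control-Expose-Headers.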

Add this alongside your Server-Timing header for cross-origin reads:

```http
Timing-Allow-Origin: *
```

For same origin pages, you usually do not need this. Test in DevTools and with curl so you catch proxy issues early.
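For a cross-origin API that the page both fetches and measures, two separate mechanisms apply. Timing-Allow-Origin controls whether Resource Timing exposes the values, and plain CORS controls whether `fetch()` can read the raw header. Below is a sketch of the response headers such an API might send. It assumes a hypothetical allowlist of trusted front-end origins, and `crossOriginTimingHeaders` is an illustrative name.

```javascript
// Hypothetical allowlist of front-end origins permitted to read timings
const TIMING_ORIGINS = ['https://www.example.com', 'https://app.example.com'];

function crossOriginTimingHeaders(requestOrigin, allowed = TIMING_ORIGINS) {
  if (!requestOrigin || !allowed.includes(requestOrigin)) return {};
  return {
    // Resource Timing: lets serverTiming entries through for this origin
    'Timing-Allow-Origin': requestOrigin,
    // CORS: lets fetch() read the response from this origin
    'Access-Control-Allow-Origin': requestOrigin,
    // Server-Timing is not a CORS-safelisted header, so expose it explicitly
    'Access-Control-Expose-Headers': 'Server-Timing',
    // Responses differ by Origin, so shared caches must key on it
    Vary: 'Origin',
  };
}
```

Echoing a single allowed origin instead of `*` keeps timings visible only to your own front ends. `Vary: Origin` stops caches from serving one origin's headers to another.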

Reading values in the browser

Once your header lands, open the Network panel in DevTools, select the request, and check its Timing tab, where a Server Timing section lists your metrics. You can also read the values in code through the PerformanceServerTiming interface.

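```javascript
// Navigation entry: timings sent with the HTML document itself.
// serverTiming is only exposed in secure contexts (HTTPS or localhost).
const [nav] = performance.getEntriesByType('navigation');
console.table((nav.serverTiming ?? []).map((x) => ({ name: x.name, dur: x.duration, desc: x.description })));

// Fetch: the raw header string (cross-origin also needs Access-Control-Expose-Headers)
const res = await fetch('/api/data'); // top-level await needs a module script
const serverTiming = res.headers.get('Server-Timing'); // raw

// Resource entries (fetch, scripts, images) carry the parsed values too
new PerformanceObserver((list) => {
  for (const entry of list.getEntries()) {
    if (entry.serverTiming?.length) console.table(entry.serverTiming.map((x) => x.toJSON()));
  }
}).observe({ type: 'resource', buffered: true });
```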

Visualizing and sharing insights

Plot backend durations next to TTFB so you can see each layer's contribution. Server-Timing makes dips and spikes easy to spot in dashboards and network waterfalls.

Share annotated screenshots with your team. Pair the numbers with commit hashes or release IDs so you can roll back regressions quickly.
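One way to see each layer's contribution is to subtract the reported durations from TTFB. Below is a minimal sketch. It assumes the metrics do not overlap (for example, `app` excludes `db` time), and `ttfbBreakdown` is a hypothetical helper.

```javascript
// Split a TTFB measurement into the parts Server-Timing explains.
// Assumes the metrics do not overlap; whatever is left over is network,
// queueing, and server work that nobody timed.
function ttfbBreakdown(ttfbMs, serverTiming) {
  const parts = serverTiming
    .filter((t) => t.duration > 0) // marker-only metrics such as cache;desc=HIT
    .map((t) => ({
      name: t.name,
      ms: t.duration,
      share: ttfbMs > 0 ? t.duration / ttfbMs : 0,
    }));
  const explained = parts.reduce((sum, p) => sum + p.ms, 0);
  return { parts, unexplainedMs: Math.max(0, ttfbMs - explained) };
}
```

In the browser, `ttfbBreakdown(nav.responseStart - nav.requestStart, nav.serverTiming)` works directly on the navigation entry. That interval also includes a network round trip, which ends up in `unexplainedMs`.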

15 quick wins with the Server Timing API

Start small and stack wins. The list below shows where Server-Timing pays off most in real teams.

- Isolate database slowness from app logic. If db is high while app is low, tune queries or add indexes.
- Spot cache misses. A rising share of cache MISS values means requests are not hitting your caches, or your cache keys need work.
- Prove edge latency. Use edge to measure CDN or worker time so infrastructure teams have real numbers.
- Guard server-side rendering. Track render so your SSR budget stays under 150 ms at the median.
- Catch third-party calls. If auth or payment spikes, you have evidence for escalations.
- Stop blind TTFB fights. Break TTFB into db, app, and edge so you point fixes at the right owner.
- Measure cold starts. Compare app on warm instances against cold boots to plan capacity.
- Quantify geographic impact. Edge durations, segmented by region, reveal users who need better routing.
- Budget per route. Keep /product under a strict app budget and warn on breach.
- Verify caching headers. If edge is near zero, your CDN is likely serving from cache.
- Validate feature flags. When a new flag adds 50 ms to app, roll back with confidence.
- Benchmark before and after upgrades. Show executives how much backend work actually dropped.
- Harden incident response. Use consistent names so on-call engineers see the same map every time.
- Feed RUM. Log metrics alongside user timings to study their impact on behavior.
- Train new hires. The header is a living sketch of your backend pipeline.
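Several of these wins (feature flags, upgrades, cold starts) come down to comparing two groups of samples. Below is a sketch that compares per-metric medians. It assumes samples are arrays of `{ name, duration }` objects, such as `serverTiming` lists from RUM or parsed headers from synthetic runs. `compareMedians` is a hypothetical name.

```javascript
function median(values) {
  const s = [...values].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Group durations by metric name across many samples
function byName(samples) {
  const out = new Map();
  for (const timings of samples) {
    for (const { name, duration } of timings) {
      if (!out.has(name)) out.set(name, []);
      out.get(name).push(duration);
    }
  }
  return out;
}

// Median per metric before vs. after a change (release, flag, upgrade)
function compareMedians(before, after) {
  const a = byName(before);
  const b = byName(after);
  const report = {};
  for (const [name, values] of b) {
    if (!a.has(name)) continue; // new metric, nothing to compare against
    const was = median(a.get(name));
    const now = median(values);
    report[name] = { before: was, after: now, deltaMs: now - was };
  }
  return report;
}
```

Medians resist the occasional outlier better than averages, which matters when sample sizes are small.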

Core Web Vitals, SEO, and business impact

Better backend times cut TTFB, and because LCP cannot happen before the first byte arrives, they improve LCP too. Server-Timing shows you what to cut on that critical path, and the faster perceived load can give SEO a lift.

Use these metrics to set route budgets and track them alongside Core Web Vitals. When LCP drifts, check whether db or app has crept up and fix the right layer first.

If you need help aligning performance with growth, explore our SEO services. Faster sites rank better and convert more.

Governance and naming conventions that scale

Set a naming policy. Server-Timing works best when every service uses the same names. Document the standard names (db, app, edge, cache) and the rules for adding new ones.

Review header size quarterly and remove stale metrics. Keep budgets in your repo so they ship with code.
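A naming policy is easier to maintain when it is checked automatically. Below is a sketch. It assumes a hypothetical `REGISTRY` object kept in the repo and a list of metric names observed in recent responses. `auditMetricNames` is an illustrative name.

```javascript
// Hypothetical registry: the documented names and what each one means
const REGISTRY = {
  db: 'Database time, all queries',
  app: 'Application logic and SSR',
  edge: 'CDN or worker time',
  cache: 'Cache lookup; desc is HIT or MISS',
};

// observed: metric names seen in recent responses (from logs or RUM)
function auditMetricNames(observed, registry = REGISTRY) {
  const seen = new Set(observed);
  return {
    // Names in the wild that nobody documented
    undocumented: [...seen].filter((n) => !Object.hasOwn(registry, n)).sort(),
    // Documented names nobody emits any more
    stale: Object.keys(registry).filter((n) => !seen.has(n)).sort(),
  };
}
```

Undocumented names point to services that drifted from the policy. Stale entries are candidates for the quarterly cleanup.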

CI, budgets, and automatic alerts

Add synthetic tests that fetch key pages, parse Server-Timing, and fail the build if a budget is breached. This turns performance into a first-class check, just like unit tests.

Send RUM samples to your metrics stack and alert on p95 and p99. Roll up trends by route and geography so you catch regressions outside the lab.
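Below is a sketch of the budget check itself, under these assumptions: `BUDGETS` is a hypothetical per-route table in milliseconds, samples are arrays of `{ name, duration }` collected from repeated synthetic fetches or RUM, and the percentile uses the simple nearest-rank method.

```javascript
// Hypothetical per-route budgets in milliseconds; keep this table in the repo
const BUDGETS = {
  '/': { app: 150, db: 50 },
  '/product': { app: 120, db: 40 },
};

// Nearest-rank percentile (p from 0 to 100) of an array of numbers
function percentile(values, p) {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// samples: serverTiming arrays collected for one route.
// Returns a human-readable line for every budget that is breached.
function checkBudgets(route, samples, p = 95) {
  const budget = BUDGETS[route] ?? {};
  const breaches = [];
  for (const [name, limit] of Object.entries(budget)) {
    const values = samples
      .flatMap((timings) => timings.filter((t) => t.name === name))
      .map((t) => t.duration);
    if (values.length === 0) continue; // metric not emitted on this route
    const observed = percentile(values, p);
    if (observed > limit) breaches.push(`${route} ${name} p${p}=${observed}ms > ${limit}ms`);
  }
  return breaches;
}
```

In CI, a script can collect samples, call `checkBudgets`, print any breaches, and set `process.exitCode = 1` to fail the build. The same `percentile` function works for p99 alerts on RUM data.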

Privacy and security

Treat Server-Timing like any public header: anyone who loads the page can read it. Never include user IDs, SQL, or secrets. Use generic names and short descriptions only.

Sample on high-traffic pages to control header bloat. You can also emit detailed timings only on error responses, or only for logged-in users, if that suits your policy.

Troubleshooting and gotchas

Header not visible in DevTools. Check that proxies, CDNs, or server frameworks do not strip it, and that the name is spelled exactly Server-Timing. If DevTools shows it but serverTiming comes back empty in JavaScript, check that the page runs in a secure context (HTTPS or localhost) and, for cross-origin resources, that Timing-Allow-Origin is set.

Values look wrong. Ensure you measure only backend work, not client time. Check that durations are plain numbers in milliseconds rather than seconds.

Header too large. Trim descriptions, shorten names, and reduce metrics. Keep the header lean so it does not inflate every response.
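Some of these problems can be caught with a few heuristic checks. Below is a sketch that assumes parsed entries of `{ name, duration }`. `lintTimings` is hypothetical, and its rules are hints, not part of the spec.

```javascript
// Heuristic checks for values that "look wrong"; these are hints, not rules
function lintTimings(serverTiming) {
  const warnings = [];
  const seen = new Set();
  for (const { name, duration } of serverTiming) {
    if (seen.has(name)) warnings.push(`${name}: duplicate metric name`);
    seen.add(name);
    if (!Number.isFinite(duration) || duration < 0) {
      warnings.push(`${name}: duration ${duration} is not a non-negative number`);
    }
  }
  // If every non-zero duration is below 1, seconds were probably sent as ms
  const timed = serverTiming.filter((t) => t.duration > 0);
  if (timed.length > 0 && timed.every((t) => t.duration < 1)) {
    warnings.push('all durations are under 1 ms; check for seconds instead of milliseconds');
  }
  return warnings;
}
```

The seconds check catches a common slip, such as passing a raw Nginx `$request_time` ("0.012") straight into `dur`.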

Page speed synergy: caching, compression, and images

Use CDN caching and origin caching to remove redundant work. Pair that with Brotli or gzip to squeeze bytes. Server-Timing shows the savings clearly.

Compress images, serve modern formats, and lazy-load images below the fold. All images on this page are compressed and cached to improve page speed.

A quick case study

A retail team adopted Server-Timing on their product page. They saw db at 120 ms and app at 260 ms, which explained the slow TTFB and weak LCP.

By adding a cache key and trimming SSR work, db dropped to 40 ms and app to 110 ms. TTFB improved 2.5x and LCP moved from 3.2 s to 2.1 s on median. Conversions followed.

Operationalizing across teams

Incorporate timing into your PR template and your release checklist. Include a screenshot of DevTools and raw header values for each major change.

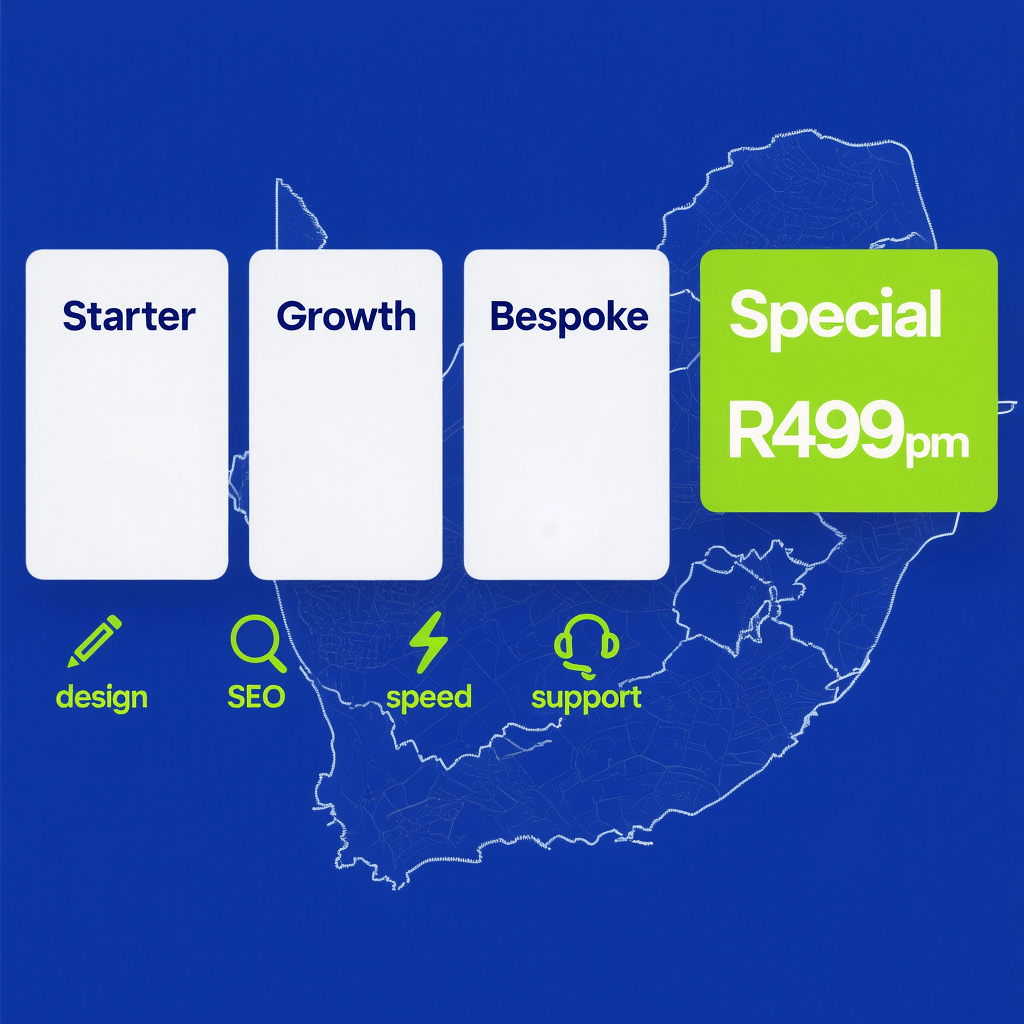

If you want a partner to set this up and keep it humming, Brand Nexus Studios can help integrate timing with your build pipeline and dashboards across environments.

Integrate with analytics and the design process

Instrument key templates and APIs, then feed insights into your design and engineering cadence. When a component is heavy, budget for its cost in the design phase.

Learn how performance ties into clean builds and maintainable front ends with our website design and development approach. Faster foundations make iteration easy.

Close the loop with outcome tracking. Route performance data into your analytics and reporting so teams can see the business effect of backend changes.

Advanced patterns you will actually use

Composite metrics

Emit app as the sum of several spans when you cannot send every detail. You still get a single budget to track.

Conditional emission

Send full details on 1 percent of traffic and a short header on the rest. This keeps overhead tiny while preserving rich samples for analysis.
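Below is a sketch of the composite and conditional patterns together. It assumes a hypothetical `groups` table that maps each composite name to the spans it sums, plus a request ID to sample on. It uses FNV-1a, a simple non-cryptographic hash, so the same request always lands in the same sample. `collapse`, `inDetailSample`, and `timingsToEmit` are illustrative names.

```javascript
// FNV-1a: a tiny non-cryptographic 32-bit hash, good enough for bucketing
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Sum detailed spans into composite metrics. groups maps a composite name
// to the span names it covers; other spans pass through unchanged. This
// assumes spans do not overlap, otherwise the sum double counts.
function collapse(timings, groups) {
  const owner = new Map();
  for (const [composite, members] of Object.entries(groups)) {
    for (const member of members) owner.set(member, composite);
  }
  const totals = new Map();
  for (const { name, duration } of timings) {
    const key = owner.get(name) ?? name;
    totals.set(key, (totals.get(key) ?? 0) + duration);
  }
  return [...totals].map(([name, duration]) => ({ name, duration }));
}

// Same request ID, same answer: retries and log lines agree on the sample
function inDetailSample(requestId, rate) {
  return fnv1a(requestId) / 2 ** 32 < rate;
}

// Full detail for roughly `rate` of requests (1 percent by default),
// composite metrics for the rest
function timingsToEmit(timings, groups, requestId, rate = 0.01) {
  return inDetailSample(requestId, rate) ? timings : collapse(timings, groups);
}
```

Hashing the request ID instead of calling `Math.random()` means a sampled request can be traced end to end, because every service makes the same decision.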

Edge plus origin split

Emit edge at the CDN and app at the origin. When TTFB grows, you immediately know which side owns the delay.

Per route budgets

Set stricter limits on critical money pages. Dashboards should group by route as a first class dimension.

Checklist for production readiness

- Metrics defined and documented

- Server-Timing header emitted on all target routes

- Timing-Allow-Origin configured where needed

- CDN and proxies pass through the header

- DevTools verification screenshots attached to PRs

- RUM collection wired and sampling configured

- Budgets set and alerts in place

- Privacy review complete

FAQs

What is the Server Timing API? It is a response header, plus a matching browser API, that sends backend timings to the browser so you can see where time is spent and fix slow code paths.

Do I need a library? No. You write the Server-Timing header directly. Many frameworks already expose hooks to do this quickly.

Will this slow my site? The header is small, and timing a few spans costs very little CPU. That cost is easily outweighed by faster diagnosis.

Can I use it on APIs and SPAs? Yes. Add it to API responses and read with fetch. Use consistent names so dashboards are easy to query.

Is it supported across browsers? DevTools visualizations vary, but browsers that do not understand the header simply ignore it. The PerformanceServerTiming interface is available in current versions of Chrome, Edge, Firefox, and Safari, in secure contexts only.

How do I keep headers small? Use short names, avoid verbose descriptions, and limit to a few key metrics per route.

Where does it fit in my roadmap? Start on the most important pages, then roll out to APIs. Treat it as a quality gate equal to tests and accessibility.


Final thoughts and next steps

Server-Timing gives you a straight line from problem to fix. Start with two metrics, validate them in DevTools, and wire them into your dashboards.

If you want help implementing this cleanly across environments and teams, Brand Nexus Studios can integrate timing, budgets, and dashboards into your workflow so you can scale with confidence.

Enjoyed this guide? Subscribe, drop a comment, or share it with your team. If you have questions, email info@brandnexusstudios.co.za and we will get you unstuck fast.