
WebAssembly Performance Impact on JS: 21 Proven Wins

If you have wondered how big the WebAssembly performance impact on JS can be, you are in the right place. The short answer is that it can be huge on compute-heavy tasks and modest elsewhere. The long answer is that it depends on where you place the boundary and how you move data.

In this guide we unpack what WebAssembly does well, where JavaScript still shines, and how to measure improvements without guesswork. You will get a toolkit of patterns, benchmarks, and shipping tips that make the WebAssembly performance impact on JS both predictable and repeatable.

Tip: Think hybrid. Keep your UI in JS, send the hot loops to WASM, and minimize boundary crossings. That is how you win on speed and simplicity.

WebAssembly in plain terms

WebAssembly is a portable binary format designed to run at near-native speed on the web and on servers. You compile from languages like C, C++, Rust, or Zig, then load the .wasm module in the browser or Node.

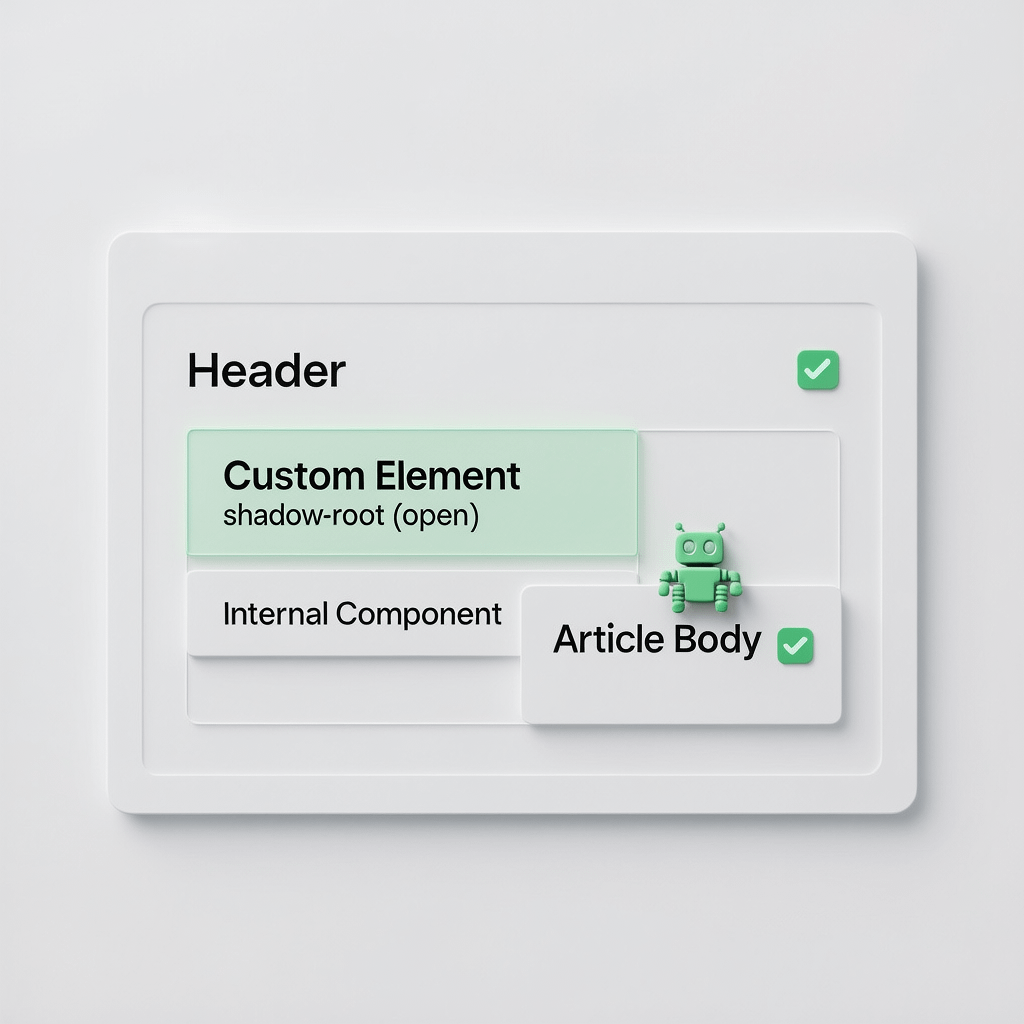

It does not replace JavaScript. You still need JS for the DOM, events, and most application logic. WebAssembly complements JS by handling number crunching and tight loops that strain the main thread.

Where the WebAssembly performance impact on JS is strongest

WASM is a specialist. It wins where CPU time dominates and the output is compact. Point it at work that benefits from typed memory, vectorization, and predictable control flow.

- Codecs and transforms: image resizing, audio processing, video filtering, compression, and decompression.

- Math and simulation: physics steps, finance models, signal processing, or geospatial computation.

- Search and parse: regex engines, parsers, and data scanning over large buffers.

- Pathfinding and optimization: A*, Dijkstra, and local search over grids and graphs.

- Crypto and hashing: checksums, signature verification, and privacy-preserving transforms.

These tasks keep hot loops tight and avoid frequent interop. That is the ideal setup for a strong WebAssembly performance impact on JS.

Where JavaScript remains the right choice

JavaScript still owns the UI and the glue. It integrates with the DOM, handles events, and moves data between components with less friction than WASM can today.

- UI orchestration: rendering, routing, and state transitions.

- Lightweight logic: form validation, simple transforms, and formatting.

- Network and storage: fetch, IndexedDB, and local cache housekeeping.

Use WASM for the heavy lifting and JS for everything else. This division keeps your stack flexible while delivering the WebAssembly performance impact on JS where it matters most.

The JS to WASM boundary is the real cost

Calls across the boundary are not free. The runtime must bridge JS objects and WASM linear memory. That means potential conversions for strings, arrays, and complex types.

- Function call overhead: small for numbers and pointers, higher for complex objects.

- String conversion: UTF-8 encoding and decoding adds measurable cost.

- Array copies: boxed arrays and nested structures require allocation and copying.

The fix is simple. Batch your work and pass big buffers. Avoid chatty call patterns. Keep hot loops inside WASM and send back compact results.
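To make the cost of chatty calls concrete, here is a minimal sketch that sums the same bytes two ways: one boundary crossing per element, and one crossing for the whole buffer. The tiny hand-assembled module, its `sum(ptr, len)` export, and the helper names are assumptions made for this illustration, not part of any real library.

```javascript
// Hand-assembled module (an assumption for this sketch) exporting:
//   memory             - one 64 KiB page of linear memory
//   sum(ptr, len): i32 - adds up `len` bytes starting at `ptr`
const SUM_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x05, 0x03, 0x01, 0x00, 0x01, // memory section: 1 page
  0x07, 0x10, 0x02, // export section: 2 exports
  0x06, 0x6d, 0x65, 0x6d, 0x6f, 0x72, 0x79, 0x02, 0x00, // "memory"
  0x03, 0x73, 0x75, 0x6d, 0x00, 0x00, // "sum"
  0x0a, 0x2d, 0x01, 0x2b, // code section: one body of 43 bytes
  0x01, 0x01, 0x7f, // one i32 local (the accumulator)
  0x02, 0x40, 0x03, 0x40, // block, loop
  0x20, 0x01, 0x45, 0x0d, 0x01, // if len == 0, leave the block
  0x20, 0x02, 0x20, 0x00, 0x2d, 0x00, 0x00, 0x6a, 0x21, 0x02, // acc += mem[ptr]
  0x20, 0x00, 0x41, 0x01, 0x6a, 0x21, 0x00, // ptr += 1
  0x20, 0x01, 0x41, 0x01, 0x6b, 0x21, 0x01, // len -= 1
  0x0c, 0x00, 0x0b, 0x0b, // continue loop, end loop, end block
  0x20, 0x02, 0x0b, // return acc
]);

const { memory, sum } = new WebAssembly.Instance(new WebAssembly.Module(SUM_WASM)).exports;

// Copy the input into linear memory once.
const input = Uint8Array.from({ length: 1000 }, (_, i) => i % 7);
new Uint8Array(memory.buffer, 0, input.length).set(input);

// Chatty: one boundary crossing per element.
function sumChatty(ptr, len) {
  let total = 0;
  let crossings = 0;
  for (let i = 0; i < len; i++) {
    total += sum(ptr + i, 1);
    crossings++;
  }
  return { total, crossings };
}

// Batched: the loop stays inside WASM, one crossing in total.
function sumBatched(ptr, len) {
  return { total: sum(ptr, len), crossings: 1 };
}

const chatty = sumChatty(0, input.length);
const batched = sumBatched(0, input.length);
console.log(chatty, batched);
```

Both paths return the same total, but the chatty version pays the call overhead a thousand times. Timing the two with your own workload is the only reliable way to see how much that matters on a given engine.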

Memory layout and data passing that scales

WASM exposes a linear memory buffer. You work with it through typed arrays. This allows predictable access and SIMD-friendly layouts.

Prefer flat buffers over nested objects

Store structs in contiguous memory with fixed field sizes and explicit offsets. WASM linear memory is little endian, so write multi-byte fields in little-endian order from JS. This avoids extra traversal and reduces cache misses.
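As a rough sketch of a flat layout, the snippet below packs an array of point objects into one contiguous buffer with fixed offsets and reads a record back. The 12-byte record layout and the function names are hypothetical choices for this example.

```javascript
// Hypothetical record layout (12 bytes, no padding):
//   offset 0: x  (float32)
//   offset 4: y  (float32)
//   offset 8: id (uint32)
const RECORD_SIZE = 12;

// Pack an array of { x, y, id } objects into one contiguous byte buffer.
function packPoints(points) {
  const buffer = new ArrayBuffer(points.length * RECORD_SIZE);
  const view = new DataView(buffer);
  points.forEach((p, i) => {
    const base = i * RECORD_SIZE;
    view.setFloat32(base, p.x, true); // true = little endian
    view.setFloat32(base + 4, p.y, true);
    view.setUint32(base + 8, p.id, true);
  });
  return new Uint8Array(buffer);
}

// Read one record back without materializing the whole array as objects.
function readPoint(bytes, index) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const base = index * RECORD_SIZE;
  return {
    x: view.getFloat32(base, true),
    y: view.getFloat32(base + 4, true),
    id: view.getUint32(base + 8, true),
  };
}

const packed = packPoints([
  { x: 1.5, y: -2, id: 7 },
  { x: 0.25, y: 8, id: 42 },
]);
console.log(packed.byteLength, readPoint(packed, 1));
```

The packed bytes can be copied into linear memory in a single `set` call, and the compiled side can read them as an array of structs with the same layout.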

Pass pointers, not payloads

Allocate in WASM, copy input into the buffer once, and pass the pointer and length from JS. Read outputs back with a view that targets the same buffer.

    // JS side - copy input into WASM memory once
    const ptr = wasm.alloc(input.length);
    new Uint8Array(wasm.memory.buffer, ptr, input.length).set(input);
    const outPtr = wasm.process(ptr, input.length);
    const outLen = wasm.get_output_len();
    // Create the view after the call, then copy out with slice() so the
    // result stays valid if linear memory grows later
    const result = new Uint8Array(wasm.memory.buffer, outPtr, outLen).slice();

Strings only at the edge

Keep strings in JS. Convert to bytes at the boundary. Reuse a single TextEncoder and TextDecoder, and encode directly into an existing buffer where you can, to prevent extra allocations.
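One way to do the conversion at the edge is sketched below, using `TextEncoder.encodeInto` to write UTF-8 straight into linear memory. The standalone `WebAssembly.Memory` stands in for a module's exported memory, and `writeString` and `readString` are hypothetical helper names.

```javascript
// Stand-in for a module's exported memory (an assumption for this sketch).
const memory = new WebAssembly.Memory({ initial: 1 });

// Reuse one encoder and one decoder instead of creating them per call.
const encoder = new TextEncoder();
const decoder = new TextDecoder('utf-8');

// Encode `text` straight into linear memory at `ptr`.
// Returns the number of bytes written; throws if the region is too small.
function writeString(text, ptr, capacity) {
  const target = new Uint8Array(memory.buffer, ptr, capacity);
  const { read, written } = encoder.encodeInto(text, target);
  if (read < text.length) {
    throw new RangeError('string does not fit in the reserved region');
  }
  return written;
}

// Decode `len` bytes starting at `ptr` back into a JS string.
function readString(ptr, len) {
  return decoder.decode(new Uint8Array(memory.buffer, ptr, len));
}

const len = writeString('héllo wasm', 0, 64);
console.log(len, readString(0, len));
```

Because `encodeInto` writes into an existing view, no intermediate byte array is allocated. The byte length can differ from the string length, so always pass the returned length across the boundary.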

SIMD and threads for serious speed

SIMD executes operations across lanes in parallel. It shines on pixel work and vector math. Modern engines support WebAssembly SIMD on most platforms.

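Threads can spread work across cores using SharedArrayBuffer. In browsers this requires cross-origin isolation, which means serving the page with Cross-Origin-Opener-Policy: same-origin and Cross-Origin-Embedder-Policy: require-corp. It can deliver large gains on parallel workloads when you partition data well.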

- SIMD for pixel loops, convolution, and color transforms.

- Threads for chunked processing and map-reduce-style tasks.

- Atomics for safe coordination with minimal contention.

Test with and without these features. Hardware and engine differences mean your gains will vary. Still, they often amplify the WebAssembly performance impact on JS by a wide margin.
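The partitioning and light coordination mentioned above can be sketched in plain JS. The helper names are hypothetical, and the claim loop runs on a single thread here only to show the pattern; in a real setup each worker would call `claimNext` on the same shared counter.

```javascript
// Split [0, total) into at most `parts` contiguous ranges of near-equal size.
function partition(total, parts) {
  const ranges = [];
  const base = Math.floor(total / parts);
  const extra = total % parts;
  let start = 0;
  for (let i = 0; i < parts && start < total; i++) {
    const size = base + (i < extra ? 1 : 0);
    ranges.push({ start, end: start + size });
    start += size;
  }
  return ranges;
}

// Atomically claim the next unprocessed chunk index, or -1 when none remain.
// Atomics.add returns the value stored before the increment.
function claimNext(counter, chunkCount) {
  const index = Atomics.add(counter, 0, 1);
  return index < chunkCount ? index : -1;
}

// In browsers, SharedArrayBuffer is only available on cross-origin isolated pages.
const counter = new Int32Array(new SharedArrayBuffer(4));
const chunks = partition(10, 3);

const claimed = [];
for (let i = claimNext(counter, chunks.length); i !== -1; i = claimNext(counter, chunks.length)) {
  claimed.push(chunks[i]);
}
console.log(claimed);
```

Claiming whole chunks keeps contention low: workers touch the shared counter once per chunk rather than once per element.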

Loading and caching WASM the right way

Fast code needs fast delivery. Treat your .wasm like a heavy asset. Load quickly, compile once, and cache aggressively.

- Use WebAssembly.instantiateStreaming where supported.

- Serve with the correct MIME type, application/wasm.

- Set long cache headers and content hashing on the .wasm file.

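- For offline starts, cache the .wasm bytes with a service worker and the Cache API. Current browsers no longer support storing compiled modules in IndexedDB, and engines cache compiled code on their own.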

Keep bundles slim on first paint. Lazy load the module near first use. This protects LCP on first load and still delivers the WebAssembly performance impact on JS once the user starts real work.
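A loader that prefers streaming and degrades gracefully might look like the sketch below. The function name `loadWasm` and the injectable `fetchFn` parameter are assumptions for illustration. Only `WebAssembly.instantiateStreaming` and `WebAssembly.instantiate` are standard APIs.

```javascript
// Try streaming compilation first; fall back to buffering the bytes when
// streaming is unavailable or fails (for example, on a wrong MIME type).
// Note that the fallback fetches the file a second time.
async function loadWasm(url, imports = {}, fetchFn = fetch) {
  if (typeof WebAssembly.instantiateStreaming === 'function') {
    try {
      return await WebAssembly.instantiateStreaming(fetchFn(url), imports);
    } catch (err) {
      console.warn('Streaming instantiate failed, falling back:', err.message);
    }
  }
  const response = await fetchFn(url);
  const bytes = await response.arrayBuffer();
  return WebAssembly.instantiate(bytes, imports);
}

// Usage (the path is a placeholder):
//   const { instance } = await loadWasm('/wasm/image_tools.wasm', imports);
```

Both code paths resolve to the same `{ module, instance }` shape, so callers do not need to know which one ran.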

Node vs browser considerations

In Node you skip the DOM and focus on raw throughput. This often yields cleaner measurements and larger gains. In the browser you balance speed with UI work on the main thread.

Be aware of different JIT strategies, memory limits, and security constraints. Align your toolchain so inputs and outputs match across both environments.

Benchmark without fooling yourself

Microbenchmarks can mislead you. Measure real tasks with realistic inputs. Report distributions, not single numbers. Repeat runs and average results responsibly.

- Stabilize the environment. Disable extensions and background tasks.

- Warm up both implementations to pay compilation and cache costs.

- Run 10 to 30 trials and capture p50, p75, and p95.

- Repeat with different input sizes to reveal scaling behavior.

- Inspect flame charts and long tasks to explain results.

This process reveals where the WebAssembly performance impact on JS is real and where it is noise. It also shows you which boundary decisions pay the biggest dividends.
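The steps above can be sketched as a small harness that warms up, runs repeated trials, and reports percentiles rather than a single number. The function names, the defaults, and the placeholder workload are assumptions for this example. The percentile uses the nearest-rank method.

```javascript
// Nearest-rank percentile over an ascending-sorted array.
function percentile(sorted, p) {
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(0, rank - 1)];
}

// Time `fn` over several trials after a warm-up phase.
function benchmark(fn, { warmup = 5, trials = 30 } = {}) {
  for (let i = 0; i < warmup; i++) fn(); // pay JIT and cache costs up front
  const samples = [];
  for (let i = 0; i < trials; i++) {
    const start = performance.now();
    fn();
    samples.push(performance.now() - start);
  }
  samples.sort((a, b) => a - b);
  return {
    p50: percentile(samples, 50),
    p75: percentile(samples, 75),
    p95: percentile(samples, 95),
  };
}

// Placeholder workload; swap in the JS and WASM versions of your real task.
function workload() {
  let acc = 0;
  for (let i = 0; i < 1e5; i++) acc += Math.sqrt(i);
  return acc;
}

console.log(benchmark(workload));
```

Run the same harness against both implementations with identical inputs, then repeat with larger and smaller inputs to see how the gap scales.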

21 proven wins for shipping WASM in production

1. Profile first

Find the hot path before touching code. Do not move trivial work to WASM. The WebAssembly performance impact on JS comes from targeted wins, not blanket rewrites.

2. Keep loops in WASM

Avoid per element calls across the boundary. Batch work. Process arrays inside WASM and return a compact result.

3. Flatten data

Use typed arrays and fixed layouts. Nested objects drag in conversions and GC overhead you do not need.

4. Pass pointers and lengths

Allocate inside WASM, write input once, then call one function. Read results by creating a view over the same buffer.

5. Convert strings at the edge

TextEncoder and TextDecoder near the boundary reduce churn. Keep internal processing in bytes.

6. Use SIMD where it fits

Pixel loops and vector math respond well to SIMD. Benchmark with realistic images and real filters.

7. Consider threads for parallel tasks

Partition work into chunks and avoid contention. Use Atomics for light coordination only where needed.

8. Stream and cache the module

Streaming compilation shortens time to ready. Cache the .wasm with long lifetimes. Serve with content hashing.

9. Lazy load near first use

Do not block initial content for a tool users may not trigger. Lazy load to protect LCP and INP.

10. Keep the JS glue small

Use a thin wrapper to handle allocation, pointers, and error paths. Fewer layers make it easier to reason about cost.

11. Track p95, not just p50

Tail latency hurts real users. Improve the long tail and you improve satisfaction and conversions.

12. Use Web Workers where appropriate

Move heavy WASM work off the main thread. Keep UI responsive while the computation runs.

13. Preload critical assets

Preload the LCP image and primary font. Faster first render improves perceived speed while you warm the WASM compile.

14. Compress images and cache aggressively

Use AVIF or WebP for photos, SVG for icons, and edge caching. Shaving bytes reduces network time and helps the WebAssembly performance impact on JS show up sooner in the session.

15. Optimize build size

Strip symbols, remove panic messages, and turn on size optimizations. Smaller .wasm files ship faster.

16. Validate with E2E flows

Integrate your WASM function into a full click path. Measure before and after at the journey level, not just micro tasks.

17. Expose a simple API

A clean JS API reduces misuse and keeps interop patterns efficient. Think one call in, one result out.

18. Monitor errors and timeouts

Collect failure rates and timeouts. Fast code that fails under load does not help real users.

19. Document the boundary contract

Write down types, ownership, and lifetime rules. This avoids accidental copies and hidden growth in glue code.

20. Keep fallbacks ready

Provide a JS path for older environments or feature flags. Rollouts are smoother with a safe escape hatch.

21. Re-profile after each change

Regressions happen. Bake measurement into your process so you catch them early and keep the WebAssembly performance impact on JS consistent.
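For the fallback path in item 20, a minimal sketch of feature detection with a JS escape hatch could look like this. The names `supportsWasm`, `sumBytesJs`, and `pickSumImpl` are hypothetical, and the byte-sum task is only a stand-in for your real workload.

```javascript
// Smallest valid module: just the magic number and the version.
const EMPTY_MODULE = new Uint8Array([0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00]);

// True when the environment exposes WebAssembly and accepts a valid module.
function supportsWasm() {
  return typeof WebAssembly === 'object' &&
    typeof WebAssembly.validate === 'function' &&
    WebAssembly.validate(EMPTY_MODULE);
}

// Plain JS implementation kept as the escape hatch.
function sumBytesJs(bytes) {
  let total = 0;
  for (const b of bytes) total += b;
  return total;
}

// `wasmImpl` is a hypothetical function backed by a loaded module.
// Pass forceJs = true (for example, from a feature flag) to use the JS path.
function pickSumImpl(wasmImpl, forceJs = false) {
  const useWasm = !forceJs && supportsWasm() && typeof wasmImpl === 'function';
  return useWasm ? wasmImpl : sumBytesJs;
}

console.log(supportsWasm(), pickSumImpl(null)(Uint8Array.of(1, 2, 3)));
```

Keeping both implementations behind one selector makes it cheap to compare them in benchmarks and to roll back during a staged release.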

Practical loading patterns with code

You can integrate WASM with modern bundlers or load it at runtime. Both patterns can be fast when you set headers and caching correctly.

    // Streaming instantiate in the browser
    // (`imports` is the import object your module expects)
    const response = await fetch('/wasm/image_tools.wasm', { cache: 'force-cache' });
    const { instance } = await WebAssembly.instantiateStreaming(response, imports);
    window.imageTools = instance.exports;

    // Dynamic import with ESM
    const mod = await import('./pkg/image_tools.js');
    await mod.default('/wasm/image_tools_bg.wasm'); // path for the .wasm file
    const result = mod.blur(ptr, len, radius);

Keep your paths predictable. Serve with strong caching and respect content hashing. This makes repeated visits feel instant and highlights the WebAssembly performance impact on JS during real tasks.

From speed to outcomes

Speed is a means to an end. Users care about faster results and fewer stalls. Conversions respond when key tasks feel smooth and instant.

Connect your performance gains to signups, orders, and retention. Tie improvements in INP and task durations to real business metrics so the work keeps getting funded.

If you need a hands-on partner to wire up a hybrid stack that balances performance with maintainability, the website design and development team can help you plan the boundary and ship with confidence.

Governance and measurement at scale

You keep what you measure. Bake budgets and alerts into your CI so regressions never reach production. Track both micro and macro metrics weekly.

- Budgets for bundle size, .wasm size, and main thread time.

- Dashboards for p50, p75, and p95 task durations by route.

- Alerts when the tail crosses your thresholds for more than a day.
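A budget gate for CI can be as small as the sketch below. The budget names, the thresholds, and the measured values are made up for illustration; in practice the measurements would come from your build output and benchmark runs.

```javascript
// Hypothetical budgets; tune them per project.
const budgets = {
  wasmBytes: 300 * 1024,
  jsBytes: 170 * 1024,
  p95TaskMs: 200,
};

// Return one entry per budget that is exceeded.
// A metric that was not measured at all also counts as a violation.
function checkBudgets(measured, limits) {
  return Object.entries(limits)
    .filter(([name, limit]) => !(name in measured) || measured[name] > limit)
    .map(([name, limit]) => ({ name, limit, actual: measured[name] ?? null }));
}

// Example measurements (made up for this sketch).
const violations = checkBudgets(
  { wasmBytes: 280000, jsBytes: 190000, p95TaskMs: 120 },
  budgets
);

// In a CI script you would fail the job when this list is not empty.
console.log(violations);
```

Treating a missing metric as a violation keeps a broken measurement step from silently passing the gate.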

To integrate reporting with product goals, add analytics and reporting that shows performance alongside conversions and support tickets.

Common pitfalls that hide your gains

Several mistakes can erase the WebAssembly performance impact on JS even when your core function is fast. Avoid these traps to protect your wins.

- Chatty interop: many small calls across the boundary.

- String-heavy APIs: repeated encoding and decoding.

- Blocking the main thread: heavy work without Web Workers.

- Large .wasm with slow delivery: missing compression and caching.

- Benchmarking only on desktop: mobile devices reveal real bottlenecks.

Fix these and your numbers will move the way you expect. Add a checklist to pull requests so good habits stick.

Image hygiene still matters

WASM does not fix slow images. Use AVIF or WebP, generate responsive sizes, and lazy load below the fold. Preload the LCP image and compress assets at build time.

These habits reduce network time and help the UI feel ready while heavy work warms up. They also keep Core Web Vitals healthy as you integrate WASM.

FAQs

Does WebAssembly always outperform JS?

No. For light logic and DOM work, JS is often faster and simpler. WASM shines on CPU bound tasks and when you minimize boundary costs.

Can I call DOM APIs from WebAssembly?

Not directly. You call DOM APIs through JS glue. This is why WASM is best for compute and JS for UI orchestration.

What languages should I compile to WASM?

Rust and C or C++ are common. Choose a language that fits your team and offers mature WASM tooling for memory and build size control.

How do I handle errors across the boundary?

Return error codes or structured results. Avoid throwing through the boundary. Keep error handling in JS where it is easier to log and report.
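One way to apply this is a thin wrapper that turns status codes into JS errors. The status values, the `WasmError` class, and the `try_process` and `get_output_len` exports below are hypothetical. Your module's actual contract may differ.

```javascript
// Hypothetical status codes agreed between the module and its JS wrapper.
const STATUS = { OK: 0, INVALID_INPUT: 1, OUT_OF_MEMORY: 2 };
const MESSAGES = { 1: 'invalid input', 2: 'out of memory' };

class WasmError extends Error {
  constructor(code) {
    super(MESSAGES[code] || `unknown wasm status ${code}`);
    this.name = 'WasmError';
    this.code = code;
  }
}

// `exports.try_process(ptr, len)` is assumed to return a status code, and
// `exports.get_output_len()` to report the output size after a success.
function processChecked(exports, ptr, len) {
  const status = exports.try_process(ptr, len);
  if (status !== STATUS.OK) throw new WasmError(status);
  return exports.get_output_len();
}
```

The compiled code only ever returns integers, while logging, reporting, and retries stay in JS where the `code` field is easy to inspect.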

Will WASM GC help interop?

Garbage collected integration can reduce bridging overhead for managed languages. Still, flat data and typed arrays remain the safest path to predictable speed.

How do I keep bundle size under control?

Use size optimizations in your compiler, strip debug info, and avoid unused dependencies. Cache the .wasm with a long lifetime.

Can I use WASM in Node for server tasks?

Yes. Node supports WASM and works well for CPU bound work like image processing or crypto. Measure gains with the same process you use in the browser.


Ready to turn speed into growth

If this guide helped, subscribe, comment, or share it with your team. Have a question about your boundary design or benchmark plan?

Email info@brandnexusstudios.co.za. If you want a partner from plan to launch, contact us and mention your top workload. Brand Nexus Studios can help you ship a hybrid stack that keeps JS delightful and makes WASM do the heavy lifting.